Anthropic MCP: Why They Created It and How to Use It

TL;DR: Anthropic created MCP to solve the N×M integration problem and open-sourced it to promote industry-wide safety standards. It is now adopted by OpenAI, Google, and Microsoft, and has been governed by the Linux Foundation since December 2025.

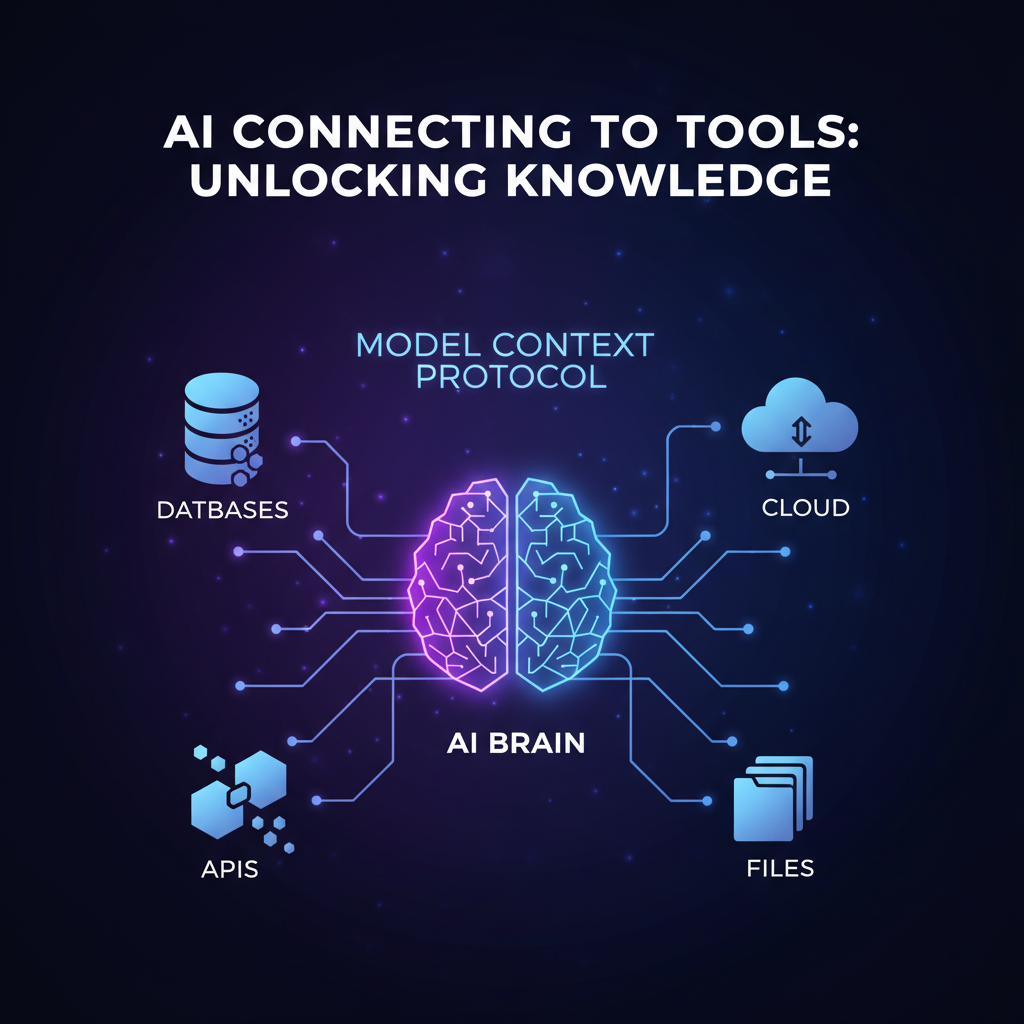

In November 2024, Anthropic faced a paradox. Claude could write code, analyze documents, debug complex systems — but only if you copy-pasted everything into the chat. Ask Claude to check your GitHub repo? "I don't have access to that." Query your database? "Please share the data."

AI was brilliant but blind.

Anthropic MCP changed that. One year later, it's the universal standard for connecting AI to the real world, adopted by OpenAI, Google, and Microsoft and implemented by more than 6,800 servers.

The Problem: N×M Integrations

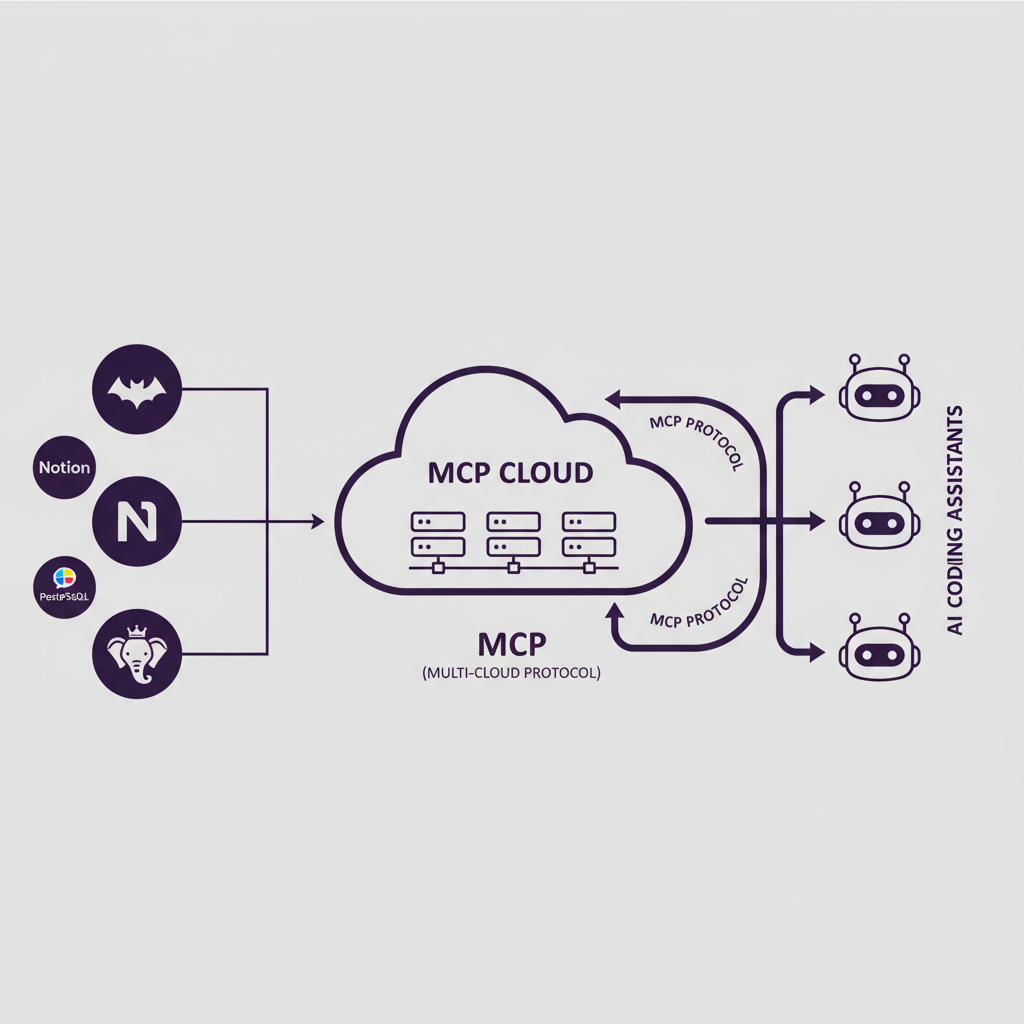

Before MCP, every AI-tool connection required custom code.

| Scenario | Integrations Needed |
|---|---|
| 5 AI apps × 10 tools | 50 custom implementations |
| 10 AI apps × 20 tools | 200 custom implementations |
| Industry scale | Thousands of duplicated efforts |

Each tool had its own API, authentication scheme, and data formats. Building integrations was a full-time job.

Anthropic's insight: Create one standard protocol. Every AI implements it once. Every tool implements it once. Everything just works.

| With MCP | Result |
|---|---|
| 5 AI clients + 10 servers | 15 implementations total |
| Any new AI client | Works with all existing servers |
| Any new server | Works with all existing AI clients |
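The arithmetic behind the two tables can be checked in a few lines. This is only a sketch of the counting argument; the function names are illustrative and not part of MCP.

```python
def point_to_point(clients: int, tools: int) -> int:
    """Custom integrations needed when every client connects to every tool directly."""
    return clients * tools


def with_shared_protocol(clients: int, servers: int) -> int:
    """Implementations needed when each side implements one shared protocol once."""
    return clients + servers


if __name__ == "__main__":
    for clients, tools in [(5, 10), (10, 20)]:
        print(
            f"{clients} clients, {tools} tools: "
            f"{point_to_point(clients, tools)} custom integrations vs "
            f"{with_shared_protocol(clients, tools)} protocol implementations"
        )
```

The gap widens as either side grows: the first count is a product, the second a sum.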

Why Open Source?

Anthropic could have kept MCP proprietary as a competitive moat for Claude. Instead, they released it under the MIT license on day one.

Their reasoning:

- Safety through standardization — Consistent security models across all AI

- Network effects — More servers = more value for everyone

- Industry trust — Open protocols get adopted faster

The bet paid off, as the adoption timeline shows:

- March 2025: OpenAI adopts MCP, starting with the Agents SDK

- May 2025: Google announces MCP support at I/O 2025

- May 2025: Microsoft integrates MCP into Windows 11 at Build 2025

- December 2025: MCP moves to the Linux Foundation (AAIF)

The Ecosystem Today

Who Uses Anthropic MCP?

| Company | How They Use It |
|---|---|
| Anthropic | Claude Desktop, Claude Code (native MCP support) |
| OpenAI | ChatGPT Desktop, Agents SDK |
| Google | Gemini, managed servers for Maps/BigQuery |
| Microsoft | VS Code Copilot, Windows 11 integration |
| Amazon | AWS AI services, Bedrock integration |

The protocol Anthropic created is now an industry standard.

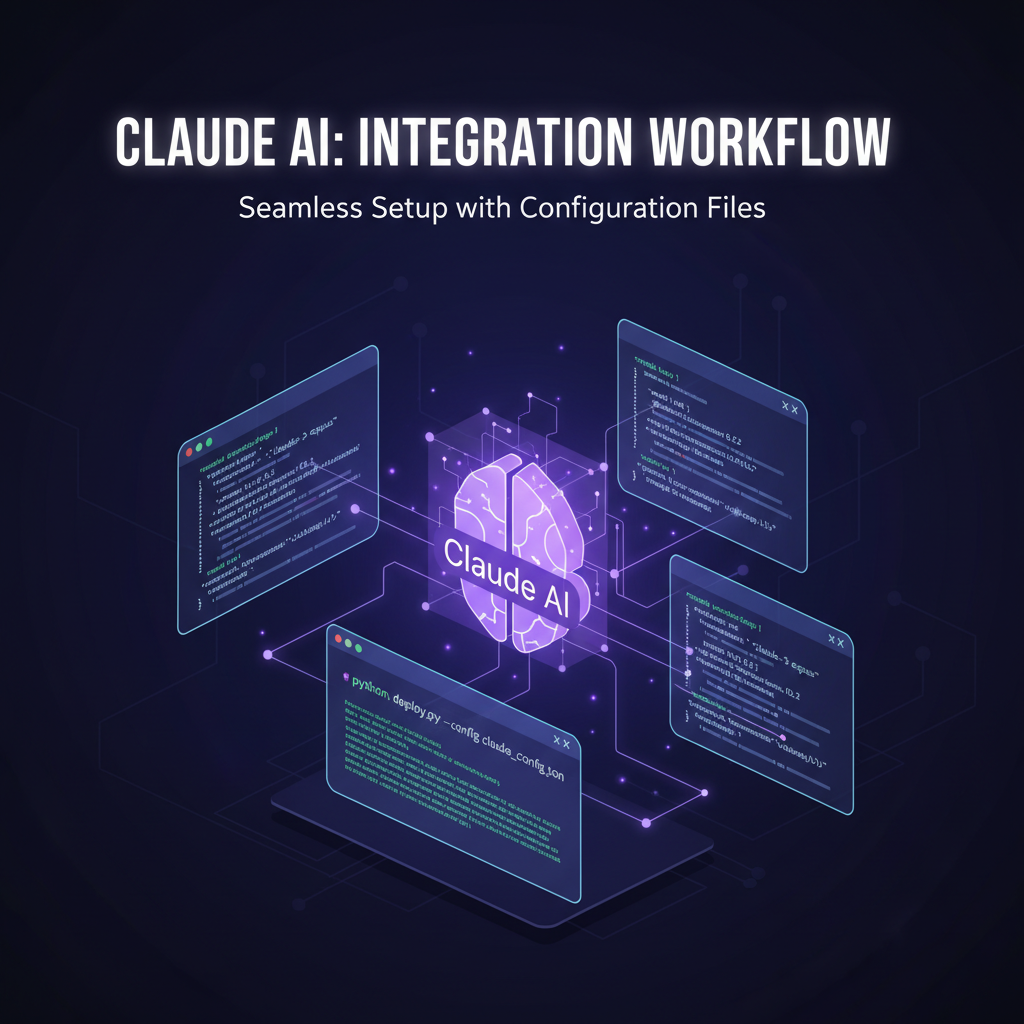

Using MCP with Claude

Getting started takes under 2 minutes.

Claude Desktop

Add servers to your config file. On macOS it lives at ~/Library/Application Support/Claude/claude_desktop_config.json:

```json
{
  "mcpServers": {
    "filesystem": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "/Users/you/projects"]
    }
  }
}
```

Restart Claude. Now ask: "What files are in my projects folder?"
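If you prefer to script the edit rather than change the JSON by hand, something like the following could work. It is a minimal sketch, assuming the config keeps the "mcpServers" layout shown above; the helper name add_server is hypothetical, and the demo writes to a throwaway file rather than your real config.

```python
import json
import tempfile
from pathlib import Path


def add_server(config_path: Path, name: str, command: str, args: list[str]) -> dict:
    """Add or replace one entry under "mcpServers", leaving other settings intact."""
    config = {}
    if config_path.exists():
        text = config_path.read_text(encoding="utf-8")
        config = json.loads(text) if text.strip() else {}
    # Create the "mcpServers" section on first use, then set this server's entry.
    config.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


if __name__ == "__main__":
    # Demo against a temporary file; back up the real config before pointing at it.
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "claude_desktop_config.json"
        add_server(
            path,
            "filesystem",
            "npx",
            ["-y", "@modelcontextprotocol/server-filesystem", "/Users/you/projects"],
        )
        print(path.read_text(encoding="utf-8"))
```

Existing servers and unrelated keys in the file are preserved, so the helper can be run once per server you want to add.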

Claude Code (CLI)

```shell
claude mcp add filesystem -- npx -y @modelcontextprotocol/server-filesystem ~/projects
```

One command. Instant access to your files.

Anthropic's Official Servers

Anthropic maintains reference implementations for common use cases:

| Server | What It Does |
|---|---|
| Filesystem | Read/write local files |
| Memory | Persistent knowledge storage |
| Git | Local repository operations |
| PostgreSQL | Database queries |
| Puppeteer | Browser automation |
| Fetch | HTTP requests |

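The Node-based servers run via npx @modelcontextprotocol/server-{name}. The Git and Fetch reference servers are Python packages and are typically run with uvx mcp-server-git and uvx mcp-server-fetch instead.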

Security by Design

Anthropic designed MCP with enterprise security requirements:

Permission Model — Servers declare capabilities. Hosts can restrict access. You control what AI can do.

OAuth 2.1 — Remote servers require proper authentication (standardized June 2025).

Content Sanitization — Untrusted content is marked to prevent prompt injection attacks.

Local First — Most servers run on your machine. Your data stays private.

The principle: AI should have exactly the access you grant — no more.
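The permission model can be pictured as a deny-by-default filter that the host applies to whatever a server declares. The sketch below is illustrative only: HostPolicy and visible_tools are hypothetical names, not part of any MCP SDK, and the tool names are examples.

```python
from dataclasses import dataclass, field


@dataclass
class HostPolicy:
    """Hypothetical host-side policy: the tools the user has granted, per server."""

    allowed: dict[str, set[str]] = field(default_factory=dict)

    def permits(self, server: str, tool: str) -> bool:
        # Deny by default: anything not explicitly granted is refused.
        return tool in self.allowed.get(server, set())


def visible_tools(policy: HostPolicy, server: str, declared: list[str]) -> list[str]:
    """Reduce the tools a server declares to the ones the user granted."""
    return [tool for tool in declared if policy.permits(server, tool)]


if __name__ == "__main__":
    policy = HostPolicy({"filesystem": {"read_file", "list_directory"}})
    declared = ["read_file", "write_file", "list_directory"]
    print(visible_tools(policy, "filesystem", declared))
    # prints ['read_file', 'list_directory']
```

The server still declares write_file, but the AI never sees it because the user did not grant it.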

MCP vs Alternatives

| Feature | Anthropic MCP | OpenAI Functions | Custom APIs |
|---|---|---|---|
| Scope | Universal | OpenAI only | Per-service |
| Discovery | Automatic | Manual | Manual |
| Session State | Maintained | Stateless | Varies |
| Ecosystem | 6,800+ servers | OpenAI tools | Custom |
| Governance | Linux Foundation | OpenAI | Vendor |

Key difference: OpenAI functions work with OpenAI. MCP works with everyone, including OpenAI, which adopted MCP in March 2025.

What's Next

Features in development:

| Feature | Description |
|---|---|
| Sampling | Servers can request AI completions |
| Elicitation | Servers can ask users questions |
| Multimodal | Image, audio, video context |
| Agent Orchestration | Multi-agent workflows |

Linux Foundation Governance

MCP moved to the Agentic AI Foundation (AAIF) in December 2025. Founding members include Anthropic, OpenAI, Google, Microsoft, AWS, Cloudflare, and Bloomberg.

This ensures long-term neutrality and industry-wide standards.

Frequently Asked Questions

What is Anthropic MCP?

The Model Context Protocol is an open standard created by Anthropic for connecting AI to external tools. It is now an industry standard governed by the Linux Foundation.

Is MCP only for Claude?

No. While Anthropic created it, OpenAI, Google, and Microsoft all support MCP. Any AI can implement the protocol.

Is it free to use?

Yes. MCP is open source under the MIT license. Free to use, modify, and build upon.

Why did Anthropic open source it?

Anthropic believes AI safety requires industry-wide standards. Open sourcing MCP enables consistent security models across all platforms.

How is MCP different from regular APIs?

Conventional APIs require a custom integration per service. MCP adds automatic discovery: a client asks a server which tools it offers and can then use them without you writing glue code for each one.
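To make discovery concrete, here is a toy in-process exchange. It is a sketch under stated assumptions: the message shapes follow the JSON-RPC 2.0 style used by MCP's tools/list and tools/call methods as I understand the specification, while the handle function, the TOOLS registry, and the add tool are hypothetical. A real session also involves an initialization handshake and a transport such as stdio, both omitted here.

```python
import json

# Toy tool registry for the sketch; the "add" tool is made up for illustration.
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the two methods this sketch supports."""
    reply = {"jsonrpc": "2.0", "id": request["id"]}
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        # Discovery: describe every tool, including a JSON Schema for its input.
        reply["result"] = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            value = tool["handler"](params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": str(value)}]}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return reply


if __name__ == "__main__":
    # The client knows nothing about the server in advance: it lists, then calls.
    listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    name = listing["result"]["tools"][0]["name"]
    answer = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                     "params": {"name": name, "arguments": {"a": 2, "b": 3}}})
    print(json.dumps(answer, indent=2))
```

The client code never mentions "add" by name. It learns the tool from the listing, which is what lets a new server work with an existing client.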

Start Using MCP Today

Anthropic MCP transformed how AI connects to the world. From a single company's solution to an industry standard in 13 months.

Next steps:

- Try it — Add a server to Claude Desktop

- Explore — Browse 6,800+ servers

- Build — Create your own with Python or TypeScript
